One of the biggest challenges in operating a forklift is the driver’s limited view of the surroundings, especially when storing and retrieving load carriers or transporting bulky loads. Various camera and assistance systems are installed on the vehicles to support the driver and increase operational safety. However, the control elements and screens found on most vehicles today, which display job data, vehicle parameters or the feed from reversing or fork cameras, restrict the driver’s view even further.

A new approach is being pursued by scientists at the Institut für Integrierte Produktion Hannover (IPH) gGmbH and the Institute of Transport and Automation Technology (ITA) at Leibniz University Hannover in the joint research project ViSIER – Virtual vision enhancement and intuitive interaction through Augmented Reality on industrial trucks (IGF project 20158 N).

Their goal is to implement an operator assistance system for forklifts that uses augmented reality (AR) to compensate for the driver’s restricted vision caused by vehicle components and the load.

Surrounding area survey

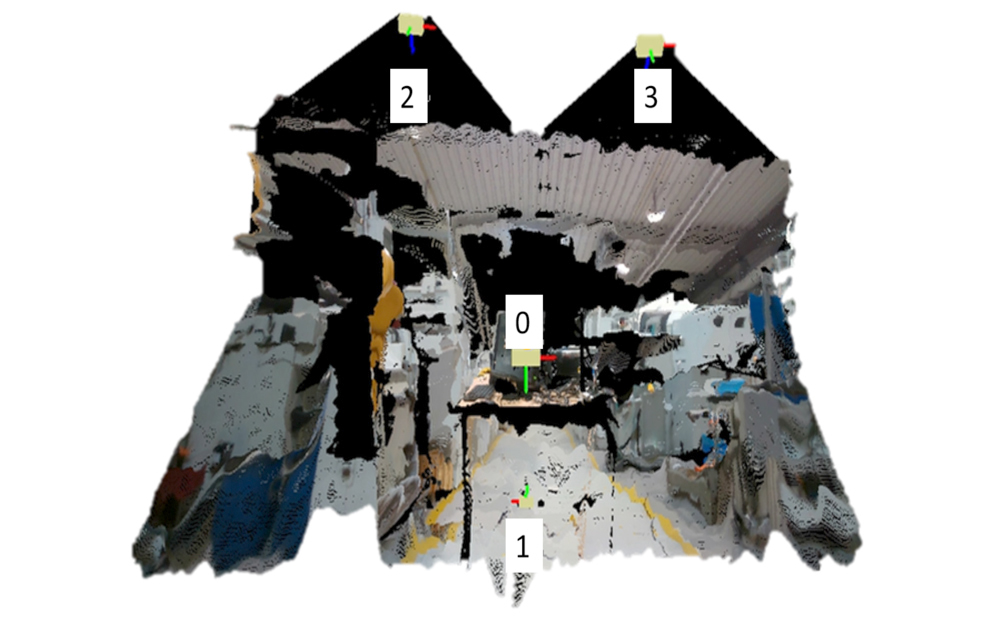

Capturing the environment is the essential basis for the assistance system. For this purpose, ITA has mounted RGB-D cameras at strategic positions on a demonstrator vehicle. The demonstrator, a Linde E16 counterbalanced truck, was provided by Kion.

The RGB-D cameras provide a color value for each pixel together with the corresponding depth information. A field-programmable gate array (FPGA) integrated in the camera converts the USB interface to Ethernet, the standard used in industry. Power is supplied via Power over Ethernet (PoE). A voltage converter connected to the forklift battery supplies the PoE switch required for this.
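To illustrate what per-pixel color plus depth makes possible, the following minimal sketch back-projects a single depth pixel into a 3D point using the standard pinhole camera model. The article does not describe the project's actual reconstruction pipeline; the function name and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are assumptions chosen for illustration.

```python
from typing import Tuple


def backproject(u: float, v: float, depth_m: float,
                fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with metric depth into camera coordinates.

    Pinhole model: fx, fy are focal lengths in pixels, (cx, cy) is the
    principal point. All values here are hypothetical, not project data.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


# A pixel 600 px to the right of the image center, 2 m away,
# seen by a camera with a 600 px focal length (illustrative numbers).
point = backproject(920, 240, 2.0, fx=600, fy=600, cx=320, cy=240)
print(point)
```

Applying this to every pixel yields a colored point cloud in the coordinate system of the respective camera, which is why the camera poses discussed in the next section are needed to merge several views.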

In cooperation with the company Vetter, ITA has developed forks with integrated cameras. Various adapters allow the cameras’ angle of inclination to be varied over the course of the project.

Camera calibration

To capture the environment, the position and orientation (pose) of each camera in a reference coordinate system is required. This pose is determined from images in a calibration process, which is divided into an offline and an online part. The offline calibration comprises an intrinsic calibration of the individual cameras and an initial extrinsic calibration of the camera systems.

ITA has selected and implemented the algorithms for the initial calibration. For this calibration, images of the calibration patterns are taken in different positions and orientations. In each image, the pattern must be visible both to a defined base camera, which represents the reference coordinate system, and to the camera currently being calibrated. By matching the image features between the two views, the pose of the respective camera relative to the base camera is determined.

In addition to the initial extrinsic calibration, a dynamic extrinsic calibration is required. This is necessary because the pose of the camera systems in relation to each other can change, for example, when raising the forks or tilting the lift mast. The dynamic extrinsic calibration is to be based on the principle of visual odometry.

Position determination

The position of the operator’s head, or rather of the AR glasses, is determined optically using the internal cameras of the AR glasses. IPH has placed markers in the forklift in such a way that at least one is always detected by the camera of the AR glasses. The software detects and identifies the markers and from this calculates the pose of each marker relative to the camera. Because the position of the markers in the forklift coordinate system is known, transformation matrices can be set up and used to transform coordinates into the reference coordinate system.
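The chain of transformations can be sketched with homogeneous 4x4 matrices. This is a minimal illustration, not the project's code: the naming convention `a_T_b` (maps coordinates from frame `b` into frame `a`), the helper `pose_z` and all numeric values are assumptions. Given the known marker pose in the forklift frame and the detected marker pose in the camera frame, the pose of the glasses camera in the forklift frame follows as `forklift_T_camera = forklift_T_marker * inverse(camera_T_marker)`.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 homogeneous transformation matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t: Matrix) -> Matrix:
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def pose_z(yaw_rad: float, x: float, y: float, z: float) -> Matrix:
    """Hypothetical helper: rotation about the z axis plus a translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [[c, -s, 0.0, x],
            [s, c, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def glasses_in_forklift(forklift_T_marker: Matrix, camera_T_marker: Matrix) -> Matrix:
    """Pose of the glasses camera in the forklift coordinate system."""
    return matmul(forklift_T_marker, invert_rigid(camera_T_marker))


# Illustrative values only: a marker mounted in the cabin and its detected pose.
forklift_T_marker = pose_z(-0.5, 0.8, 0.0, 1.0)
camera_T_marker = pose_z(0.2, 0.0, 0.1, 0.6)
forklift_T_camera = glasses_in_forklift(forklift_T_marker, camera_T_marker)
```

If several markers are visible at once, each one yields its own estimate of `forklift_T_camera`; how the project combines them is not stated in the article.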

In the demonstrator forklift, 3D-printed adapter plates were needed to create planar surfaces so that the markers are detected reliably. Using several smaller markers on a plate instead of one large marker proved beneficial for the robustness of detection. In addition, the markers must have sufficiently high contrast to the background. While marker detection runs stably, the marker axes are sometimes assigned incorrectly, which makes the calculated pose incorrect as well. To filter out these incorrectly oriented axes, allowable ranges were defined for translation along each axis of the forklift coordinate system and for rotation around these axes, based on the driver’s freedom of movement.
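Such a plausibility check can be sketched as a simple range test on the six pose components. The limits below are invented for illustration and are not the values used in the project; the names `PoseLimits`, `EXAMPLE_LIMITS` and `is_plausible` are likewise hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Range = Tuple[float, float]


@dataclass(frozen=True)
class PoseLimits:
    """Allowed head pose ranges in the forklift coordinate system."""
    translation_m: Tuple[Range, Range, Range]  # along x, y, z
    rotation_deg: Tuple[Range, Range, Range]   # around x, y, z


# Hypothetical limits, loosely modeled on a seated driver's freedom of movement.
EXAMPLE_LIMITS = PoseLimits(
    translation_m=((-0.4, 0.4), (-0.3, 0.3), (0.9, 1.5)),
    rotation_deg=((-30.0, 30.0), (-45.0, 45.0), (-110.0, 110.0)),
)


def is_plausible(translation_m: Sequence[float],
                 rotation_deg: Sequence[float],
                 limits: PoseLimits) -> bool:
    """Return True only if every pose component lies inside its allowed range."""
    values = list(translation_m) + list(rotation_deg)
    ranges = list(limits.translation_m) + list(limits.rotation_deg)
    return all(lo <= v <= hi for v, (lo, hi) in zip(values, ranges))


# A pose with a flipped marker axis typically shows up as an implausible rotation.
good = is_plausible((0.1, 0.0, 1.2), (5.0, -10.0, 20.0), EXAMPLE_LIMITS)
flipped = is_plausible((0.1, 0.0, 1.2), (180.0, -10.0, 20.0), EXAMPLE_LIMITS)
print(good, flipped)
```

Poses that fail the check are simply discarded, so the display falls back to the last plausible estimate or to another marker.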

View restriction compensation

The algorithm implemented by ITA uses 2D image data to detect view restrictions. The vision-restricting components are detected by matching color information from the operator’s field of view against color information from the corresponding, previously determined area of a reconstructed scene. In the next step, the actual view restriction compensation is performed as a mask-based overlay with information from the reconstructed scene.
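The two steps, color matching and mask-based overlay, can be sketched on tiny images represented as nested lists of RGB tuples. This is one possible reading of the description, not the ITA algorithm itself: it assumes that a pixel counts as view-restricted when its color deviates from the reconstructed scene by more than a threshold, and the threshold value and function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Mask = List[List[bool]]


def color_distance(a: Pixel, b: Pixel) -> float:
    """Euclidean distance between two RGB colors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def restriction_mask(view: Image, scene: Image, threshold: float = 40.0) -> Mask:
    """Mark pixels where the operator's view differs from the reconstructed scene.

    Assumption: a large color difference means a vehicle component or the
    load blocks the view at that pixel. The threshold is illustrative.
    """
    return [[color_distance(p, q) > threshold for p, q in zip(view_row, scene_row)]
            for view_row, scene_row in zip(view, scene)]


def overlay(view: Image, scene: Image, mask: Mask) -> Image:
    """Replace masked pixels with the corresponding reconstructed-scene pixels."""
    return [[q if m else p for p, q, m in zip(view_row, scene_row, mask_row)]
            for view_row, scene_row, mask_row in zip(view, scene, mask)]


# One-row example: the second pixel is blocked by a dark mast profile.
view = [[(200, 200, 200), (30, 30, 30)]]
scene = [[(198, 201, 199), (90, 160, 90)]]
mask = restriction_mask(view, scene)
compensated = overlay(view, scene, mask)
print(mask, compensated)
```

A real implementation would work on full camera frames and would need to tolerate lighting differences between the live view and the reconstruction, which a fixed RGB threshold does not handle well.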

In a further step, IPH implemented a marker-based view restriction detection. View restrictions are detected using QR codes placed inside the truck. Based on these codes, a 3D model of the truck, in which the sight-restricting components are defined, is aligned and overlaid.

Next steps

ITA and IPH are currently working on merging the partial results. The assistance system will first be tested in the ITA test hall. This will be followed by validation at the project partner China Logistic Center in Itzehoe.

The aim of the research project is to give forklift drivers a clear view – with the help of AR glasses, they can easily see through obstacles.

Further information on the project can be found at visier.iph-hannover.de.