As technical components continue to shrink and optical technologies advance, micro assembly and its automation are becoming increasingly important. Because of their high accuracy and quality requirements, micro assembly processes demand a high level of process knowledge. Automating them therefore requires not only precise robots but also a large number of sensors.

As a result, such automation solutions are complex, time-consuming to program and difficult to adapt. Until now, they have typically been programmed directly on the robot, usually in so-called joint space. Every movement must therefore be programmed on the robot itself, and any necessary coordinate transformations (e.g. from the camera system to the gripper system) must be calculated manually.
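To illustrate the kind of coordinate transformation that otherwise has to be worked out by hand, the following Python sketch maps a point detected in the camera frame into the gripper frame using 4x4 homogeneous transforms. It is a minimal sketch under stated assumptions: the frame names, the restriction to rotations about the z-axis and all calibration values are hypothetical. In a ROS2 system, these poses would typically come from calibration and the tf2 transform tree rather than being hard-coded.

```python
import math

Matrix = list[list[float]]


def homogeneous(rot_z_deg: float, translation: tuple[float, float, float]) -> Matrix:
    """Build a 4x4 homogeneous transform from a rotation about z and a translation.

    Assumption: only rotations about z are modelled, to keep the sketch short.
    """
    a = math.radians(rot_z_deg)
    c, s = math.cos(a), math.sin(a)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def invert_rigid(t: Matrix) -> Matrix:
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    p_new = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p_new[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(t: Matrix, point: tuple[float, float, float]) -> tuple[float, ...]:
    """Apply a homogeneous transform to a 3D point."""
    v = [point[0], point[1], point[2], 1.0]
    return tuple(sum(t[i][k] * v[k] for k in range(4)) for i in range(3))


if __name__ == "__main__":
    # Hypothetical calibration: poses of camera and gripper in the robot base frame (mm).
    T_base_camera = homogeneous(90.0, (100.0, 0.0, 50.0))
    T_base_gripper = homogeneous(0.0, (80.0, 20.0, 10.0))
    # Chain the transforms: gripper <- base <- camera.
    T_gripper_camera = matmul(invert_rigid(T_base_gripper), T_base_camera)
    # A feature detected by the camera, expressed in the camera frame (mm).
    print(transform_point(T_gripper_camera, (1.0, 2.0, 0.0)))  # approximately (18.0, -19.0, 40.0)
```

Chaining transforms this way is mechanical but error-prone when done manually for every new camera or tool position, which is one reason frameworks that manage coordinate frames automatically simplify programming.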

Intuitive robot programming

In recent years, new intuitive programming methods have considerably simplified the use of robots for process automation. Even users without extensive specialist knowledge can now integrate new robot applications into their production and increase productivity. These methods essentially abstract away textual programming: instead of having to master a programming language, users build the robot program from easily understandable function blocks (e.g. moving, gripping).

Although these programming methods are widely used in industrial robotics, they are rarely found in micro assembly, and offline programming and assembly simulation are not widespread there either. The particular challenges of automating micro assembly are the expert knowledge it requires and the complex system structure, both of which make programming and simulation more difficult.

Framework for precision assembly

The Institute of Assembly Technology and Robotics (match) at Leibniz University Hannover is currently researching intuitive and (partially) autonomous programming methods for micro assembly. The researchers aim to support users systematically when programming micro assembly processes, ranging from increasingly simple programming to support through autonomous processes.

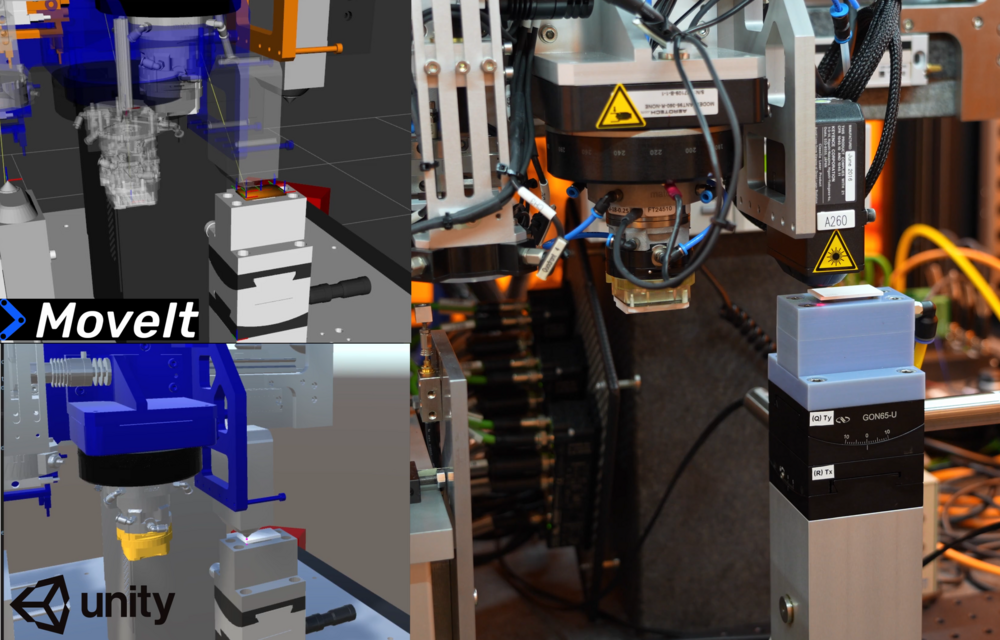

This support covers both robot programming and the use of sensor technology (such as image acquisition and laser measurement systems). The starting point is the micro assembly system at match, for which a ROS2 framework was developed. ROS2 (Robot Operating System 2) is an open-source software framework for robots; despite its name, it is middleware rather than an operating system, and it provides hardware-independent libraries and tools for robot programming. One example of a tool within this framework is MoveIt2, which offers functions for path planning. The system is also simulated in Unity, a platform for game development that also supports physics simulation. This allows assembly processes to be simulated and programmed offline, with programs transferred seamlessly to the real system.

A central approach to intuitive programming is the use of so-called skills: universal function blocks that together cover all the capabilities required for micro assembly processes. These skills are hardware-independent and can be used on different systems as long as those systems are ROS2-compatible. Users program the assembly process by combining these skills, which can also be combined with artificial intelligence methods to implement autonomous processes.
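The skill concept can be sketched in Python as a small class hierarchy: every skill exposes the same `execute` method and accesses the hardware only through an abstract backend, so the same assembly program could, in principle, run on different systems. This is a minimal sketch, not match's implementation; all class and method names (`RobotBackend`, `Skill`, `MoveTo`, `Grip`, `Release`, `SkillSequence`, `LoggingBackend`) are hypothetical. In a ROS2 system, the backend would wrap real components, for example MoveIt2 for path planning and a gripper driver.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Protocol


class RobotBackend(Protocol):
    """Hypothetical hardware abstraction that concrete systems would implement."""

    def move_to(self, pose: tuple[float, float, float]) -> bool: ...

    def set_gripper(self, closed: bool) -> bool: ...


class Skill(ABC):
    """A reusable, hardware-independent function block."""

    @abstractmethod
    def execute(self, backend: RobotBackend) -> bool:
        """Run the skill on the given backend and return True on success."""


@dataclass
class MoveTo(Skill):
    pose: tuple[float, float, float]  # target position, hypothetical units (mm)

    def execute(self, backend: RobotBackend) -> bool:
        return backend.move_to(self.pose)


@dataclass
class Grip(Skill):
    def execute(self, backend: RobotBackend) -> bool:
        return backend.set_gripper(closed=True)


@dataclass
class Release(Skill):
    def execute(self, backend: RobotBackend) -> bool:
        return backend.set_gripper(closed=False)


@dataclass
class SkillSequence(Skill):
    """Composite skill: runs its steps in order and stops at the first failure."""

    steps: list[Skill]

    def execute(self, backend: RobotBackend) -> bool:
        # all() short-circuits, so later steps are skipped after a failure.
        return all(step.execute(backend) for step in self.steps)


class LoggingBackend:
    """Stand-in for real hardware: records commands instead of moving anything."""

    def __init__(self) -> None:
        self.log: list[str] = []

    def move_to(self, pose: tuple[float, float, float]) -> bool:
        self.log.append(f"move {pose}")
        return True

    def set_gripper(self, closed: bool) -> bool:
        self.log.append("close" if closed else "open")
        return True


# A pick-and-place program assembled purely from skills (hypothetical poses).
pick_and_place = SkillSequence([
    MoveTo((10.0, 5.0, 2.0)),
    Grip(),
    MoveTo((20.0, 5.0, 2.0)),
    Release(),
])
```

Keeping all hardware access behind one interface is what makes the skills portable in this sketch: replacing `LoggingBackend` with a ROS2-based backend would leave the assembly program `pick_and_place` unchanged.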

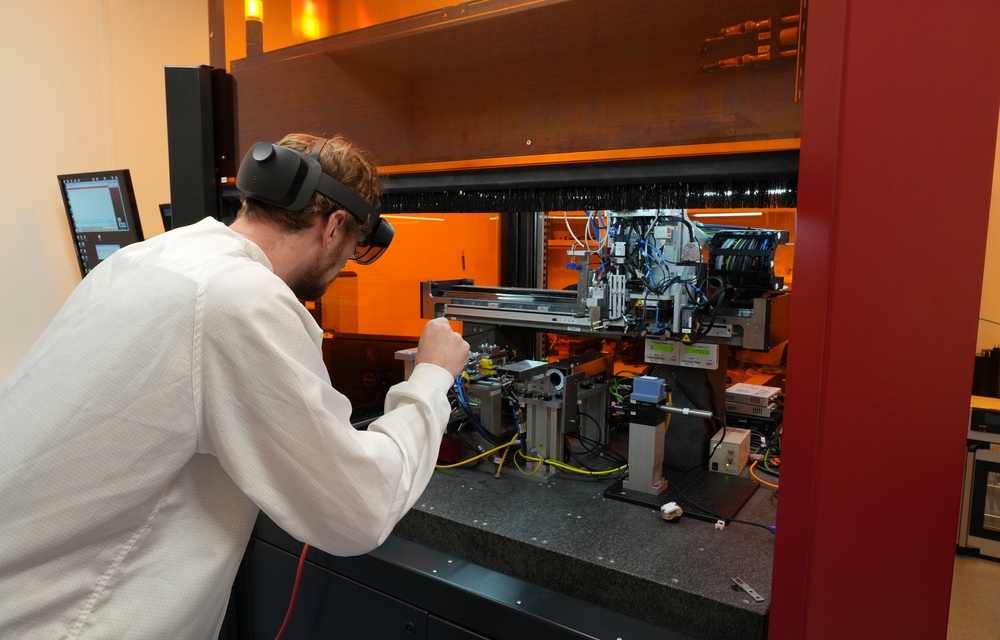

Making process knowledge visible with AR and VR

Besides enabling simulation, modeling the micro assembly system in Unity makes it easy to implement augmented reality (AR) and virtual reality (VR) applications. AR and VR offer an intuitive human-machine interface and, in precision assembly, can be used to visualize processes and, in particular, process knowledge (e.g. gripping points on components). They can also present processes that take place on the micrometer scale to the user, improving process understanding.

The research and approaches at match described here thus help to simplify the automation of micro assembly processes and make it accessible to people without expert knowledge.